The following article is Chapter Three of a book entitled Finishing The Rat Race. All previously uploaded chapters are available (in sequence) by following the link above or from the category link in the main menu, where you will also find a table of contents and a preface on why I started writing it.

*

What a piece of work is a man!

— William Shakespeare 1

*

Almost two decades ago, as explosions lit up the night sky above Baghdad, I was at my parents’ home in Shropshire, sat on the sofa and watching the rolling news coverage. After a few hours we were still watching the same news, though for some reason the sound was now off and the music system on.

“It’s a funny thing,” I remarked, between sips of whisky, and not certain at all where my words were leading, “that humans can do this… and yet also… this.” I suppose that I was trying to firm up a feeling. A feeling that arose in response to the unsettling juxtaposition of images and music, and that involved my parents and myself in different ways, as detached spectators. But my father didn’t understand at first, and so I tried again.

“I mean how can it be,” I hesitated, “that on the one hand we are capable of making such beautiful things as music, and yet on the other, we are the engineers of such appalling acts of destruction?” Doubtless I could have gone on elaborating, but there was no need. My father understood my meaning, and the evidence of what I was trying to convey was starkly before us – human constructions of the sublime and the atrocious side-by-side.

In any case, the question, being as it is a question of unavoidable and immediate importance to all of us, sort of hangs in the air perpetually, although as a question it is usually considered and recast in alternative ways – something I shall return to – while mostly it remains not merely unanswered, but unspoken. We treat it instead like an embarrassing family secret, which is best forgotten. My own framing was hesitant, but it was good enough for my father to reply, and his answer was predictable too: “that’s human nature”. This is the quick and easy answer, although it actually misses the point entirely – a common fallacy technically known as ignoratio elenchi. For ‘human nature’ in no way provides an answer but simply opens a new question. Just what is human nature? – This is the question.

The generous humanity of music and the indiscriminate but cleverly conceived cruelty of carpet bombing are just different manifestations of what human beings are capable of, and thus of human nature. If you point to both and say “this is human nature”, well yes – and obviously there’s a great deal else besides – whereas if you reserve the term only for occasions when you feel disapproval, revulsion or outright horror – as many do – then your condemnation is simply another feature of “human nature”. In fact, why do we judge ourselves at all?

So this chapter represents an extremely modest attempt to grapple with what is arguably the most complex and involved question of all questions. Easy answers are good when they cut to the bone of a difficult problem; however, explaining man’s inhumanity to man, as well as to his other fellow creatures, surely deserves a better and fuller account than that man is by nature inhumane – if for no other reason than that the very word ‘human’ owes its origins to the earlier form ‘humane’! Upon this etymological root is there really nothing else but vainglorious self-deception and wishful thinking? I trust that language is in truth less consciously contrived.

The real question then is surely this: When man becomes inhumane, why on this occasion or in this situation, but not on all occasions and under all circumstances? And how come we still use the term ‘inhumane’ at all, if being inhumane is so hard-wired into our human nature? The lessons to be learned by tackling such questions can hardly be overstated; lessons that might well prove crucial in securing the future survival of our societies, our species, and perhaps of the whole planet.

*

I Monkey business

“There are one hundred and ninety-three living species of monkeys and apes. One hundred and ninety-two of them are covered with hair.”

— Desmond Morris 2

*

The scene: just before sunrise about one million years BC, a troop of hominids are waking up and about to discover a strange, rectangular, black monolith that has materialised from nowhere. As the initial excitement and fear of this strange new object wears off, the hominids move closer to investigate. Attracted perhaps by its remarkable geometry, its precise and unnatural blackness, they reach out tentatively to touch it and then begin to stroke it.

As a direct, though unexplained consequence of this communion, one of the ape-men has a dawning realisation. Sat amongst the skeletal remains of a dead animal, he picks up one of the sun-bleached thigh bones and begins to swing it about. Aimless at first, his flailing attempts simply scatter the other bones of the skeleton. In time, however, he gains control and his blows increase in ferocity, until at last, with one almighty thwack, he manages to shatter the skull to pieces. It is a literally epoch-making moment of discovery.

The following day, as the two troops mingle beside a water-hole, a fight breaks out. His new weapon in hand, our hero deals a fatal blow against the alpha male of the rival troop. Previously at the mercy of predators and reliant on scavenging to find their food, the tribe can now be freed from fear and hunger too. Triumphant, he is the ape-man Prometheus, and in ecstatic celebration of this achievement, he tosses the bone high into the air, whereupon, spinning up and up, higher and higher into the sky, the scene cuts from spinning bone into an orbiting space-craft…

*

Stanley Kubrick’s 2001: A Space Odyssey is enigmatic and elusive. Told in a sequence of related if highly differentiated parts, it repeatedly confounds the viewer’s expectations – the scene sketched above is only the opening act of Kubrick’s seminal science-fiction epic.

Kubrick said that “you are free to speculate as you wish about the philosophical and allegorical meaning of the film” 3. So, taking Kubrick at his word, I shall do just that – although not for every aspect of the film, but specifically for its first scene, up to and including that most revered and celebrated ‘match cut’ in cinema history, and its relationship to Kubrick’s mesmerising and seemingly bewildering climax: moments of transformation, when reality per se is re-imagined. On one level, at least, all of the ideas conveyed in this opening as well as in the more mysterious closing scenes (more below) are abundantly clear. For Kubrick’s exoteric message involves the familiar Darwinian interplay between the foxes and the rabbits and their perpetual battle for survival, which is the fundamental driving force behind the evolutionary development of natural species.

Not that Darwin’s conception should be misunderstood as war in the everyday sense, however, although this is a very popular interpretation; for one thing, the adversaries in these Darwinian arms races, most often predator and prey, in general remain wholly unaware of any escalation in armaments and armour. Snakes, for example, have never sought to strengthen their venom, any more than their potential victims, most spectacularly the opossums that evolved to prey on them, made any conscious attempts to hone their blood-clotting agents. Today’s snake-eating opossums have extraordinary immunity to the venom of their prey purely because natural selection strongly favoured opossums with heightened immunity.

Of course, the case is quite different when we come to humankind. For it is humans alone who deliberately escalate their methods of attack and response and do so by means of technology. To talk of an “arms race” between species is therefore a somewhat clumsy metaphor for what actually occurs in nature – although Darwin is accurately reporting what he finds.

And there is another crucial difference between the Darwinian ‘arms race’ and the human variant. Competition between species is not always as direct as between predator and prey, and frequently looks nothing like a war at all. Indeed, it is more often analogous to the competitiveness of two hungry adventurers lost in a forest. For it may well be that both of our adventurers are completely unaware that somewhere in the midst of the forest there is a hamburger left on a picnic table. Neither adventurer need be aware of the presence of the other, and yet they are – at least in a strict Darwinian sense – in competition, since if either one stumbles accidentally upon the hamburger then, merely by process of elimination, the other has lost his chance of a meal. As competitors, then, the faster walker, or the one with keener eyes, or the one with the greatest stamina, will gain a very slight but significant advantage over the other. Thus, perpetual competition between individuals need never amount to war, or even to battles, and this is how Darwin’s ideas are properly understood.

In any case, such contests of adaptation, whether between predators and prey, or sapling trees racing towards the sunlight, can never actually be won. The rabbits may get quicker but the foxes must get quicker too, since if either species fails to adapt then it will not survive long. So it’s actually a perpetual if dynamic stalemate, with species trapped like the Red Queen in Through the Looking-Glass, always having to keep moving ahead just to hold their ground – a paradox that evolutionary biologists indeed refer to as “the red queen hypothesis” 4.

We might still judge that both sides are advancing, since there is, undeniably, a kind of evolutionary progress, with the foxes growing craftier as the rabbits get smarter too, and so we might conclude that such an evolutionary ‘arms race’ is the royal road to all natural progress – although Darwin noted that other evolutionary pressures, most notably sexual selection, have tremendous influence as well. We might even go further by extending the principle to admit our own steady technological empowerment, viewed objectively as a by-product of our own rather more deliberate arms race. Progress is thus assured by the constant and seemingly inexorable fight for survival against hunger and the elements, and no less significantly, by the constant squabbling of our warring tribes over land and resources.

Space Odyssey draws deep from the science of Darwinism, and spins a tale of our future. From bony proto-tool, slowly but inexorably, we come to the mastery of space travel. From terrestrial infants, to cosmically-free adults – this is the overarching story of 2001. But wait, there’s more to that first scene than immediately meets the eye. That space-craft which Kubrick cuts to isn’t just any old space-craft…

Look quite closely and you might see that it’s actually one of four space-craft, similar in design, which form the components of an orbiting nuclear missile base, and though in the film this is not as clear as in Arthur C. Clarke’s parallel version of the story (the novel and film were co-creations written side-by-side), the missiles are there if you peer hard enough.

So Space Odyssey is, at least on one level, the depiction of technological development, which, though superficially from first tool to more magnificent uber-tool (i.e., the spacecraft), is also – and explicitly in the novel – a development from the first weapon to what is, up to now, the ultimate weapon, and thus from the first hominid-cide to the potential annihilation of the entire human population. 5

Yet 2001, the year in the title, also magically heralds a new dawn for mankind: a dawn that, as with every other dawn, bursts from the darkest hours. The meaning therefore, as far as I judge it, is that we, as parts of nature, are born to be both creators and destroyers, agents of light and darkness; and that our innate and unassailable evolutionary drive, dark as it can be, also has the potential to lead us to the film’s weirdly antiseptic yet quasi-mystical conclusion, and the inevitability of our grandest awakening – a cosmic renaissance as we follow our destiny towards the stars.

Asked in an interview whether he agreed with some critics who had described 2001 as a profoundly religious film, Kubrick replied:

“I will say that the God concept is at the heart of 2001—but not any traditional, anthropomorphic image of God. I don’t believe in any of Earth’s monotheistic religions, but I do believe that one can construct an intriguing scientific definition of God, once you accept the fact that there are approximately 100 billion stars in our galaxy alone, that each star is a life-giving sun and that there are approximately 100 billion galaxies in just the visible universe.”

Continuing:

“When you think of the giant technological strides that man has made in a few millennia—less than a microsecond in the cosmology of the universe—can you imagine the evolutionary development that much older life forms have taken? They may have progressed from biological species, which are fragile shells for the mind at best, into immortal machine entities—and then, over innumerable eons, they could emerge from the chrysalis of matter transformed into beings of pure energy and spirit. Their potentialities would be limitless and their intelligence ungraspable by humans.”

When the interviewer pressed further, inquiring what this envisioned cosmic evolutionary path has to do with the nature of God, Kubrick added:

“Everything—because these beings would be gods to the billions of less advanced races in the universe, just as man would appear a god to an ant that somehow comprehended man’s existence. They would possess the twin attributes of all deities—omniscience and omnipotence… They would be incomprehensible to us except as gods; and if the tendrils of their consciousness ever brushed men’s minds, it is only the hand of God we could grasp as an explanation.” 6

Kubrick was an atheist, although unlike many atheists he acknowledged that the religious impulse is an instinctual drive no less irrepressible than our hungers to eat and to procreate. This is so because at the irreducible heart of religion lies pure transcendence: the climbing up and beyond ordinary states of being. This desire to transcend, whether by shamanic communion with the ancestors and animalistic spirits, by monastic practices of meditation and devotion, or by brute technological means, is something common to all cultures.

Thus the overarching message in 2001 is firstly that human nature is nature, for good and ill, and secondly that our innate capacity for reason will inexorably propel us to transcendence of our terrestrial origins. In short, it is the theory of Darwinian evolution writ large. Darwinism appropriated and repackaged as an updated creation story – a new mythology and surrogate religion that lends an alternative meaning of life. We will cease to worship nature or humanity, which is nature, it says, and if we continue to worship anything at all, our new icons will be representative only of Progress (capital P). Thus, evolution usurps god! Of course, the symbolism of 2001 can be given esoteric meaning too – indeed, there can never be a final exhaustive analysis of 2001 because like all masterpieces the full meaning is open to an infinitude of interpretations – and this I leave entirely for others to speculate upon.

In 1997, Arthur C. Clarke was invited by the BBC to appear on a special edition of the documentary series ‘Seven Wonders of the World’ (Season 2).

*

I have returned to Darwin just because his vision of reality has become the accepted one. And once we acknowledge that human nature is indeed another natural outgrowth, it is always tempting to look to Darwin for answers. However, as I touched upon in the previous chapter, though Darwinism as a biological mechanism is extremely well-established science, interpretations that follow from those established evolutionary principles differ; this is especially the case when we try to make sense of patterns of animal behaviour, and how much stress to place on our own innate biological drives remains an even more hotly contested matter. But if we are to adjudicate fairly on this point then it is worthwhile first to consider how Darwin’s own ideas had originated and developed.

In fact, as with all great scientific discoveries, we can trace a number of precursors including the nascent theory of his grandfather Erasmus, a founder member of the Lunar Society, who wrote lyrically in his seminal work Zoonomia:

“Would it be too bold to imagine, that in the great length of time, since the earth began to exist, perhaps millions of ages before the commencement of the history of mankind, would it be too bold to imagine, that all warm-blooded animals have arisen from one living filament, which THE GREAT FIRST CAUSE endued with animality, with the power of acquiring new parts, attended with new propensities, directed by irritations, sensations, volitions, and associations; and thus possessing the faculty of continuing to improve by its own inherent activity, and of delivering down those improvements by generation to its posterity, world without end!” 7

So doubtless Erasmus sowed the seeds for the Darwinian revolution, although his influence alone does not account for Charles Darwin’s central tenet that it is “the struggle for existence” which provides, as indeed it does, one plausible and vitally important mechanism in the process of natural selection, and thus, a key component in his complete explanation for the existence of such an abundant diversity of species. But again, what caused Charles Darwin to suspect that “the struggle for existence” necessarily involved such “a war of all against all” to begin with?

In fact, Darwin had borrowed this idea of “the struggle for existence”, a phrase that he uses as the title of chapter three of The Origin of Species, directly from Thomas Malthus 8. And interestingly, Alfred Russel Wallace, the less remembered co-discoverer of evolution by natural selection, who had reached his own conclusions entirely independently of Darwin’s work, was also inspired in part by this same concept, which though ancient in origin was already widely attributed to Malthus.

However, the notion of “a war of all against all” traces back still further, at least as far as the English Civil War and the writings of the highly influential political philosopher Thomas Hobbes. 9

So it is indirectly from the writings of these two redoubtable Thomases that much of our modern thinking about Nature, and therefore, by extension, about human nature, has been drawn. It is instructive therefore to examine the original context in which Hobbes’s and Malthus’s ideas were formed and developed; contributions that have been crucial not only to the evolution of evolutionary thinking, but to the development of post-Enlightenment western civilisation. To avoid too much of a digression, I have decided to leave further discussion of Malthus and his continuing legacy for the addendum below, and to focus attention here solely on the thoughts and influence of Hobbes. But to get to Hobbes, who first devoted his attention to the study of the natural sciences and optics in particular, I’d like to begin with a brief diversion by way of my own subject, Physics.

*

The title of Thomas Pynchon’s most celebrated novel, Gravity’s Rainbow, published in 1973, darkly alludes to the ballistic flight path of Germany’s V2 rockets that fell over London during the last days of the Second World War. Pynchon was able to conjure up this provocative metaphor because by the late twentieth century everyone knew perfectly well, and seemingly from their own direct experience, that projectiles follow a symmetrical and parabolic arc. It is strange to think, therefore, that for well over a millennium people in the western world, including the most scholarly among them, had falsely believed that motion followed a set of quite different laws, presuming that the trajectory of a thrown object, rather than following any sweeping arc, must be understood instead as composed of two quite distinct phases.

Firstly, impelled upwards by a force, the object was presumed to enter a stage of “unnatural motion” as it climbed away from the earth’s surface – its natural resting place – before eventually running out of steam and then abruptly falling back to earth under “natural motion”. This is indeed a common-sense view of motion – the view that every child can instantly recognise and immediately comprehend – although, as with many common-sense views of the physical world, it is absolutely wrong.

As a rather striking illustration of scientific progress, this shift in modern understanding was brought to my attention by a university professor who had worked it into an unforgettable demonstration that kicked off his lecture on error analysis. On the blackboard he first sketched out the two competing hypotheses: a beautifully smooth arc captioned ‘Galileo’ and then to the left of it, a pair of disconnected arrows indicating diagonally up and then vertically down labelled ‘Aristotle’. Obviously Galileo was about to win, but then came the punchline as he pulled out a balloon, slapped it at an approximate angle of forty-five degrees before we all watched it drift back to earth just as Aristotle would have predicted! With tremendous glee he then chalked an emphatic cross to dismiss Galileo’s model, before spelling out the message (if you didn’t understand) that above and beyond all the other considerations, it is essential to design your experiment and carry out observations with due care! 10

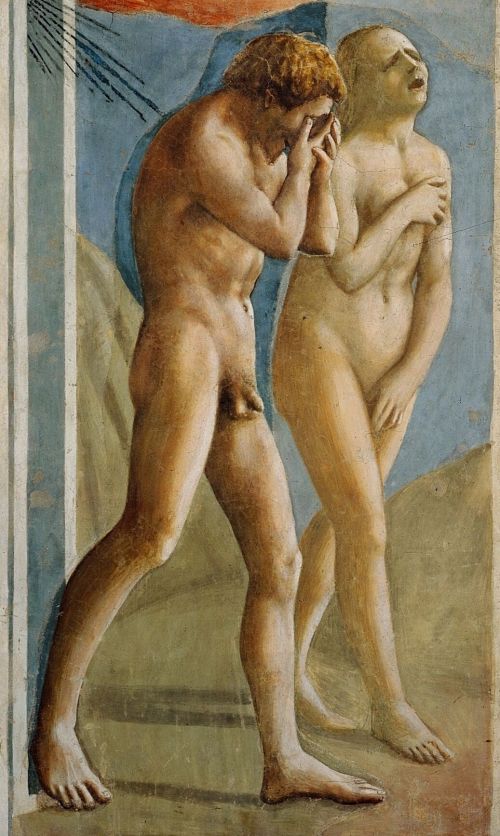
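Setting aside air resistance (the very thing that sank Galileo’s arc in the balloon demonstration), the smooth parabola follows in a couple of lines from the modern equations of motion. As a sketch in standard textbook notation, not the author’s own, assume a launch speed $v_0$ at an angle $\theta$ above the horizontal and a constant downward acceleration $g$. Horizontally nothing acts, so that speed stays constant; vertically, gravity steadily slows the rise and then speeds the fall:

$$
x(t) = v_0 \cos\theta \; t, \qquad y(t) = v_0 \sin\theta \; t - \tfrac{1}{2} g t^{2}
$$

Eliminating $t = x / (v_0 \cos\theta)$ leaves a single continuous curve rather than Aristotle’s two separate phases:

$$
y = x \tan\theta - \frac{g}{2 v_0^{2} \cos^{2}\theta}\, x^{2}
$$

This is a downward-opening parabola whose peak lies at $x = v_0^{2}\sin\theta\cos\theta / g$, exactly half the range $R = v_0^{2}\sin 2\theta / g$, which is the symmetry referred to above. Once drag matters, as it does for a balloon, the path is no longer symmetrical and the descent steepens towards the vertical.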

Now, legend tells us that Newton was sitting under an apple tree in his garden, unable to fathom what force could maintain the earth in its orbit around the sun, when all of a sudden an apple fell and hit him on the head. And if this is a faithful account of Newton’s Eureka moment, then the accidental symbolism is striking. I might even venture to suggest that by implication it was this fall of Newton’s apple that redeemed humanity; snapping Newton and by extension all humanity spontaneously out of darkness and into an Age of Reason. For if expulsion from Eden involved eating an apple, symbolically at least, Newton’s apple paved the way for a new golden age. Or, as poet Alexander Pope wrote so exuberantly: “Nature and Nature’s laws lay hid in night: God said, Let Newton be! and all was light.” 11

Of course Newton’s journey into light had not been a solo venture, and as he said himself, “if I have seen further, it is by standing on the shoulders of giants.” 12

These predecessors and contemporaries whom Newton implicitly pays homage to would include Descartes, Huygens, and Kepler, although the name that stands tallest today is Galileo of course. For it was Galileo’s observations and insights that led more or less ineluctably to what today are called Newton’s Laws, and in particular Newton’s First Law, which states (in various formulations) that objects remain at rest or in uniform motion unless acted upon by a net force.

This deceptively simple law has many surprising consequences. For instance, it means that when we see an object moving faster and faster or else slower and slower or – and this is an important point – changing its direction of motion, then we can deduce there must be a force impelling it. It also follows that there is a requirement for a force to arc the path of the earth about the sun, and, likewise, one causing the moon to revolve about the earth; hence gravity. Conversely, if an object is at rest (or moving in a straight line at constant speed – the law makes no distinction) then we know the forces acting on it must be balanced in such a way as to cancel to zero. Thus, we can tell purely from any object’s motion whether the forces acting on it are ‘in equilibrium’ or not.

An alternative way of thinking about Newton’s First Law requires the introduction of a related idea called ‘inertia’. This is the ‘reluctance’ of every object to change its motion, and it transpires that the more massive the object, the greater its inertia – so here I am paraphrasing Newton’s Second Law. Given a situation in which there are no forces acting (so no resistive forces like friction or drag), then according to this law the object must travel continually with unchanging velocity. This completely counterintuitive discovery was arguably Galileo’s finest achievement, and it is the principle behind proposed hyperloop systems – high-speed maglev pods designed to run almost without friction through near-vacuum tubes. It also permitted Galileo’s understanding of how the earth could revolve indefinitely around the sun, and oddly without us ever noticing.
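The First and Second Laws described above can be put compactly in their usual modern form (again standard notation rather than the author’s), where $\vec F_{\text{net}}$ is the sum of all the forces on a body of mass $m$ and $\vec a$ is its acceleration:

$$
\vec F_{\text{net}} = m\,\vec a
\qquad\Longrightarrow\qquad
\vec F_{\text{net}} = \vec 0 \;\iff\; \vec a = \vec 0 \;\iff\; \vec v = \text{constant}
$$

Balanced forces therefore mean unchanging velocity, whether at rest or cruising in a straight line. Inertia shows up as the division by mass: a given net force produces an acceleration $a = F_{\text{net}}/m$, so a 10 N push gives a 1 kg trolley $10\ \text{m/s}^2$ but a 1000 kg car only $0.01\ \text{m/s}^2$. A change of direction is also an acceleration, which is why an orbit needs a force at all; for a body moving at speed $v$ on a circle of radius $r$, the inward force required is

$$
F = \frac{m v^{2}}{r}
$$

and for the moon about the earth, or the earth about the sun, it is gravity that supplies it.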

Where others had falsely presumed that the birds would get left behind if the earth was in motion, Galileo saw that the earth’s moving platform was no different in principle from a travelling ship, and that, just like onboard a ship, nothing will be left behind as it travels forward – this is easier to envisage if you imagine sitting on a train and recall how it feels at constant speed if the rails are smooth, such that you sometimes cannot even tell whether the train you are on or the one on the other platform is moving.

Of course, when Galileo insisted on a heliocentric reality, he was directly challenging papal authority and paid the inevitable price for his impertinence. Moreover, when he implored his opponents merely to look through his own telescope and see for themselves, they simply declined his honest invitation. This is the nature of belief – not just religious variants but all forms – for such ‘confirmation bias’ lies deep within our nature, causing most of us to have little desire to make new discoveries or learn new facts if ever these threaten to disrupt our hard-won opinions on matters of central concern.

So finally the Inquisition in Rome tried him, and naturally enough they found him guilty, sentencing Galileo to lifelong house arrest with a strict ban on publishing his ideas. Given the age, this was comparatively lenient; some three decades earlier, the Dominican friar and philosopher Giordano Bruno, who amongst other blasphemies had dared to suggest the universe had no centre and that the stars were just other suns surrounded by planets of their own, was burned at the stake.

Today, our temptation is to regard the Vatican’s hostility to Galileo’s new science as a straightforward attempt to deny the reality purely because it devalues the Biblical story which places not just earth, but the holy city of Jerusalem at the centre of the cosmos. However, Galileo’s heresy actually strikes a more fundamental blow, since it challenges not only papal infallibility but the entire millennium-long Scholastic tradition – the tripartite dialectical synergy of Aristotle, Neoplatonism and Christianity – and by extension, the whole hierarchical establishment of the late medieval period and much more.

Prior to Galileo, as my professor illustrated so expertly with his hilarious balloon demonstration, the view had endured that all objects obeyed laws according to their inherent nature. Thus, rocks fell to earth because they were by nature ‘earthly’, whereas the sun and moon remained high above us because they were made of altogether more heavenly stuff. In short, things back then knew their place.

By contrast, Galileo’s explanation is startlingly egalitarian. According to his radical reinterpretation, not only do all things obey common laws – ones that apply no less resolutely to the great celestial bodies than to everyday sticks and stones – but, no longer impelled by their inherent nature (a living essence), everything is instead directed always and absolutely by blind external forces.

At a stroke the universe was reduced to base mechanics; the deepest intricacies of the stars and the planets (once gods) entirely akin to elaborate mechanisms. At a stroke, it is fair to say not only that Galileo had levelled all stuff, but in the process he effectively killed the cosmos; all stuff being compelled to obey the same laws because all stuff is inherently inert.

Now if Newton’s apple is a reworking of the Fall of Man as humanity’s redemption through scientific progress, then the best-known fable of Galileo (and again the tale is wholly apocryphal) tells how he once instructed an assistant to drop cannon balls of differing sizes from the Leaning Tower of Pisa in order to test how objects fell to earth, observing that they landed together simultaneously on the grass below.

In fact, this experiment was recreated on the moon’s surface by Apollo 15 astronaut David Scott; without the hindrance of any atmosphere, objects as remarkably different as a hammer and a feather were indeed observed to accelerate at the same rate, landing in the dust at precisely the same instant. This same experiment is one I have repeated in class, stood on a desk and surrounded by bemused students who, unfamiliar with the principle, are reliably astonished; since intuitively we all believe that the heavier weights must fall faster.
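Why the mass drops out can be checked numerically. The sketch below is illustrative only: it steps a dropped object forward in time using Newton’s second law, and the drop height (1.5 m), the masses (1.3 kg for the ‘hammer’, 0.03 kg for the ‘feather’) and the air-drag constant are all hypothetical round numbers, not measured Apollo values. With no air, the weight (mass times g) is divided by that same mass, so every object receives the same acceleration; add a quadratic air drag, which does not scale with mass, and the lighter object lags behind, which is roughly why our earthbound intuition says heavier things fall faster.

```python
# Illustrative free-fall sketch: all numbers below are hypothetical round values.
G_MOON = 1.62   # approximate lunar surface gravity, m/s^2
G_EARTH = 9.81  # approximate Earth surface gravity, m/s^2


def fall_time(mass: float, height: float, g: float,
              drag: float = 0.0, dt: float = 1e-4) -> float:
    """Return the time (s) for an object dropped from rest to fall `height` metres.

    Forces: weight mass*g downwards, plus an optional quadratic air drag
    drag*v**2 upwards (`drag`, in kg/m, is a made-up illustrative constant).
    Newton's second law gives a = F_net / mass, integrated in small time steps.
    """
    t = v = fallen = 0.0
    while fallen < height:
        net_force = mass * g - drag * v * v  # weight minus air resistance
        accel = net_force / mass             # a = F / m
        v += accel * dt                      # update speed first (semi-implicit Euler)
        fallen += v * dt                     # then the distance fallen
        t += dt
    return t


if __name__ == "__main__":
    for name, mass in [("hammer", 1.3), ("feather", 0.03)]:
        moon = fall_time(mass, 1.5, G_MOON)               # no atmosphere
        earth = fall_time(mass, 1.5, G_EARTH, drag=0.01)  # with air drag
        print(f"{name}: moon {moon:.3f} s, earth with drag {earth:.3f} s")
```

Running it should show the two lunar times agreeing to within a single time step, while in the earthly case with drag the ‘feather’ trails the ‘hammer’; the particular figures depend entirely on the assumed constants.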

But digressions aside, the important point is this: Galileo’s thought experiment invokes a different Biblical reference. It is in fact a parable of sorts, reminding us all not to jump to unscientific assumptions and instead always “to do the maths”. And in common with Newton’s apple it recalls a myth from Genesis; in this case the Tower of Babel story, which was an architectural endeavour supposedly conceived at a time when the people of the world had been united and wished to build a short-cut to heaven. Afterwards, God decided to punish us all (as he likes to do) with a divide and conquer strategy; our divided nations additionally confused by the introduction of a multiplicity of languages. But then along came Galileo to unite us once more with his own gift, the universal application of a universal language called mathematics. For as he wrote:

Philosophy is written in this grand book, which stands continually open before our eyes (I say the ‘Universe’), but cannot be understood without first learning to comprehend the language and know the characters as it is written. It is written in mathematical language, and its characters are triangles, circles and other geometric figures, without which it is impossible to humanly understand a word; without these one is wandering in a dark labyrinth. 13

*

Thomas Hobbes was very well studied in the works of Galileo, and on his travels around Europe in the mid-1630s he may very well have visited the great man in Florence. 14 In any case, Hobbes fully adopts Galileo’s mechanistic conception of the universe and draws what he sees as its logical conclusion, extrapolating from what is true for external nature and determining that this must also be true of human nature – a step Galileo never ventured.

All human actions, Hobbes posits, whether voluntary or involuntary, are the direct outcomes of physical bodily processes occurring inside our organs and muscles. 15 Of the precise mechanisms, he ascribes the origins to “insensible” actions that he calls “endeavours”; something he leaves for physiologists to study and comprehend. 16

Fleshing out this bio-mechanical model, Hobbes next explains how all human motivations – which he calls ‘passions’ – must necessarily function likewise on the basis of these material processes, and are thereby similarly reducible to forces of attraction and repulsion; in his own terms, ‘appetites’ and ‘aversions’. 17

In the manner of elaborate machines, Hobbes says, humans operate in accordance with responses that entail either the automatic avoidance of pain or the increase of pleasure, the manifestation of apparent ‘will’ being nothing more than whichever of these lesser ‘appetites’ finally prevails. Concerned solely with improving his lot, Man, he concludes, is inherently ‘selfish’.

Having presented his strikingly modern conception of life as a whole and human nature more particularly, Hobbes next considers what he calls “the natural condition of mankind” (or ‘state of nature’) and this in turn leads him to consider why “there is always war of everyone against everyone”:

Whatsoever therefore is consequent to a time of War, where every man is Enemy to every man; the same is consequent to the time, wherein men live without other security, than what their own strength, and their own invention shall furnish them withall. In such condition, there is no place for Industry; because the fruit thereof is uncertain; and consequently no Culture of the Earth; no Navigation, nor use of the commodities that may be imported by Sea; no commodious Building; no Instruments of moving, and removing such things as require much force; no Knowledge of the face of the Earth; no account of Time; no Arts; no Letters; no Society; and which is worst of all, continual fear, and danger of violent death; And the life of man, solitary, poor, nasty, brutish, and short. 18

According to Hobbes, this ‘state of nature’ becomes inevitable whenever our laws and social conventions cease to function and no longer protect us from our otherwise fundamentally rapacious selves. Once civilisation gives way to anarchy, anarchy is hell, because our automatic drive to improve our own situation comes into immediate conflict with that of every other individual. To validate this claim, Hobbes then reminds us of the fastidious countermeasures everyone takes to defend themselves against their fellows:

It may seem strange to some man, that has not well weighed these things; that Nature should thus dissociate, and render men apt to invade, and destroy one another: and he may therefore, not trusting to this Inference, made from the Passions, desire perhaps to have the same confirmed by Experience. Let him therefore consider with himself, when taking a journey, he arms himself, and seeks to go well accompanied; when going to sleep, he locks his doors; when even in his house he locks his chests; and this when he knows there be Laws, and public Officers, armed, to revenge all injuries shall be done him; what opinion he has of his fellow subjects, when he rides armed; of his fellow Citizens, when he locks his doors; and of his children, and servants, when he locks his chests. Does he not there as much accuse mankind by his actions, as I do by my words? 19

Hobbes is not making any moral judgment here, since he regards all of nature, human nature included, as equally compelled by these self-same ‘passions’; objectively, then, the world he sees in his ongoing war of all against all is value-neutral. As he continues:

But neither of us accuse mans nature in it. The Desires, and other Passions of man, are in themselves no Sin. No more are the Actions, that proceed from those Passions, till they know a Law that forbids them; which till Laws be made they cannot know: nor can any Law be made, till they have agreed upon the Person that shall make it. 20

We might conclude indeed that all’s fair in love and war because fairness isn’t the point, at least according to Hobbes. What matters here are the consequences of actions, and so Hobbes’ stance is surprisingly modern.

Nevertheless, Hobbes wishes to ameliorate the flaws he perceives in human nature, in particular those born of selfishness, by constraining behaviour to accord with what he deduces to be ‘laws of nature’: precepts and general rules found out by reason. This, says Hobbes, is the only way to overcome man’s otherwise sorry state of existence, in which a perpetual war of all against all ensures that everyone’s life is “nasty, brutish and short”. Thus to save us from a dreadful ‘state of nature’ he demands conformity to more reasoned ‘laws of nature’ – in spite of the seeming contradiction!

In short, not only does Hobbes’ prognosis speak to the urgency of securing a social contract, but his whole thesis heralds our bio-mechanical conception of life and of the evolution of life. Indeed, following from the tremendous successes of the physical sciences, Hobbes’ radical faith in materialism, which must have been extremely shocking to his contemporaries, has gradually come to seem commonsensical; so much so that its overlooked presumptions led philosopher Karl Popper to coin the phrase “promissory materialism”, describing how adherents of the physicalist view casually relegate gaps in understanding to problems to be worked out in future – just as Hobbes does, of course, when he delegates the task of comprehending all human actions and ‘endeavours’ to the physiologists.

*

But is it really the case, as Hobbes concludes, that individuals can be restrained from barbarism only by laws and social contracts? If so, then we might immediately wonder why acts of indiscriminate murder and rape are comparatively rare, given that these are amongst the toughest crimes of all to foil or to solve. In fact, most people, most of the time, appear to prefer not to commit everyday atrocities, and it would be odd to suppose that they refrain purely because they fear arrest and punishment. Everyday experience tells us instead that most people have little inclination for committing violence or other acts of grievous criminal intent.

Moreover, if we look for evidence to support Hobbes’ conjecture, we actually find an abundance that refutes him. We know, for instance, that the appalling loss of life during the last world war would have been far greater still had it not been for a very deliberate lack of aim amongst the combatants. A lack of zeal for killing, even in the heat of battle, turns out to be the norm, as US General S. L. A. Marshall learned from firsthand accounts gathered at the end of the war, when he debriefed thousands of returning GIs in an effort to learn more about their combat experiences. 21 What he heard was almost too incredible: not only had three-quarters of combatants never actually fired at the enemy, not even when coming under direct fire themselves, but amongst those who did shoot, only a tiny two per cent had aimed their weapons to kill the enemy.

Nor is this lack of bloodlust a modern phenomenon. At the end of the Battle of Gettysburg during the American Civil War, the Union Army collected tens of thousands of weapons from the battlefield and discovered that the vast majority were still loaded. Indeed, more than half of the rifles had multiple loads; one had an incredible 23 loads packed all the way up the barrel. 22 Many of these soldiers had never actually pulled the trigger, most of them preferring, quite literally, to feign combat rather than fire off shots.

It transpires that contrary to the depictions of battles in Hollywood movies, by far the majority of servicemen take no pleasure at all in killing one another. Modern military training from Vietnam onwards has even developed methods to compensate for the ordinary lack of ruthlessness: heads are shaven, identities stripped, and conscripts are otherwise desensitised, turning men into better machines for war.

But then, if there is one day in history more glorious than any other surely it has to be the Christmas Armistice of 1914. The war-weary and muddied troops huddling for warmth in no-man’s land, sharing food, singing carols together, before playing the most beautiful games of football ever played: such outpourings of sanity in the face of lunacy that no movie screenplay could reinvent. Indeed, it takes artistic genius even to render such scenes of universal comradeship and brotherhood as anything other than sentimental and clichéd, and yet they happened nonetheless.

*

In his autobiography Hobbes relates that his mother’s shock on hearing the news of the approaching Spanish Armada had induced his premature birth, famously writing: “my mother gave birth to twins: myself and fear.” Hobbes lived through exceptionally fearful times, doing his utmost to avoid getting caught up in the tribulations of the English Civil War, and doubtless this accounts for why his political theory reads like an intellectual reaction to fear. But fear produces monsters, and Hobbes’ solution to societal crisis involves an inbuilt tolerance for tyranny. In fact Hobbes understood perfectly well that the power to protect is derived from the power to terrify; indeed, to kill.

In response, Hobbes manages to conceive of a system of government whose authority is sanctioned – indeed sanctified – by terrifying its subjects into consenting to their own subjugation. On this same Hobbesian basis, if a highwayman demands “your money or your life?”, then by agreeing you have likewise entered into a contract! In short, this is government by way of protection racket, and Hobbes’ keenness for an overarching, unassailable but (hopefully) benign dictatorship is perhaps best captured by the absolute power he grants the State, right down to the foundational level of determining morality as such:

I observe the Diseases of a Common-wealth, that proceed from the poison of seditious doctrines; whereof one is, “That every private man is Judge of Good and Evil actions.” This is true in the condition of mere Nature, where there are no Civil Laws; and also under Civil Government, in such cases as are not determined by the Law. But otherwise, it is manifest, that the measure of Good and Evil actions, is the Civil Law… 23

Since for Hobbes every action proceeds from a mechanistic cause, it follows that the very concept of ‘freedom’ struck him as a logical absurdity. Indeed, for someone who professed to be able to square the circle 24 – a claim that led to a notoriously bitter mathematical dispute with Oxford professor John Wallis – Hobbes’ explicit dismissal of ‘freedom’ is ironically apt:

[W]ords whereby we conceive nothing but the sound, are those we call Absurd, insignificant, and Non-sense. And therefore if a man should talk to me of a Round Quadrangle; or Accidents Of Bread In Cheese; or Immaterial Substances; or of A Free Subject; A Free Will; or any Free, but free from being hindred by opposition, I should not say he were in an Error; but that his words were without meaning; that is to say, Absurd. 25

According to Hobbes, then, freedom reduces to absurdity – or to ‘a round quadrangle’! – a perspective that understandably opens the way for totalitarian rule; and perhaps no other thinker was ever so willing as Hobbes to trade freedom for the sake of security. Ultimately, however, Hobbes is mistaken, as a famous experiment carried out originally by psychologist Stanley Milgram, and since repeated many times, amply illustrates.

*

For those unfamiliar with Milgram’s experiment, here is the set-up:

Volunteers are invited to what they are told is a scientific trial investigating the effects of punishment on learning. Having been paired up, they are then assigned the role of either teacher or learner. At this point, the learner is strapped into a chair and fitted with electrodes, while in an adjacent room the teacher is given control of an apparatus that enables him or her to deliver electric shocks. Beforehand, each teacher is given a low-voltage sample shock, just to give them a taste of the punishment they are about to inflict.

The experiment then proceeds with the teacher administering electric shocks, increasing the voltage step by step to punish each wrong answer. As the scale on the generator approaches 400V, a marker reads “Danger Severe Shock”, and beneath the final switches there is simply XXX. Proceeding beyond this level evidently runs the risk of delivering a fatal shock, but the participants are urged to proceed nonetheless.

How, you may reasonably wonder, could such an experiment have been ethically sanctioned? Well, it’s a deception. All of the learners are actors, and their increasingly desperate pleading is as scripted as their ultimate screams. Importantly, however, the true participants (who are always assigned the role of ‘teacher’) are led to believe the experiment and the shocks are for real.

The results – repeatable ones, as I say – are certainly alarming: two-thirds of the subjects will go on to deliver what they are told are potentially fatal shocks. In fact, the experiment is continued until a teacher has administered three shocks at the 450V level, by which time the actor playing the learner has stopped screaming and must therefore be presumed either unconscious or dead.

“The chief finding of the study and the fact most urgently demanding explanation”, Milgram wrote later, is that:

Ordinary people, simply doing their jobs, and without any particular hostility on their part, can become agents in a terrible destructive process. Moreover, even when the destructive effects of their work become patently clear, and they are asked to carry out actions incompatible with fundamental standards of morality, relatively few people have the resources needed to resist authority. 26

Milgram’s experiment has occasionally been misrepresented as some kind of proof of our innate human capacity for cruelty and for doing evil. But this was neither the object of the study nor the conclusion Milgram drew. The evidence instead led him to conclude that the vast majority take no pleasure in inflicting suffering, but that surprising numbers will carry on nevertheless when they have been placed under a certain kind of duress, and especially when an authority figure is instructing them to do so:

Many of the people were in some sense against what they did to the learner, and many protested even while they obeyed. Some were totally convinced of the wrongness of their actions but could not bring themselves to make an open break with authority. They often derived satisfaction from their thoughts and felt that – within themselves, at least – they had been on the side of the angels. They tried to reduce strain by obeying the experimenter but “only slightly,” encouraging the learner, touching the generator switches gingerly. When interviewed, such a subject would stress that he “asserted my humanity” by administering the briefest shock possible. Handling the conflict in this manner was easier than defiance. 27

Milgram believed that it was this tendency towards compliance amongst ordinary people that had enabled the Nazis to carry out their crimes and had led to the Holocaust. But his study might also account for why those WWI soldiers, even after sharing food and songs with the enemy, returned ready to fight on in the hours, days, weeks and years that followed the Christmas Armistice. Although disobedience was severely punished, often with the ignominy of court martial and the terror of a firing squad, it is likely that authority alone would have been persuasive enough to ensure compliance for many of those stuck in the trenches. Most people will follow orders no matter how horrific the consequences – this is Milgram’s abiding message.

In short, what Milgram’s study shows is that Hobbes’ solution is, at best, deeply misguided, because it is authoritarianism (his proposed remedy) that mostly leads ordinary humans to commit the worst atrocities. So Milgram offers us a way of considering Hobbes from a top-down perspective: addressing the issue of how obedience to authority influences human behaviour.

But what about the bottom-up view? After all, this was Hobbes’ favoured approach, since he very firmly believed (albeit incorrectly) that his own philosophy was solidly underpinned by pure mathematics – his grandest ambition had been to derive an entire philosophy logically from the theorems of Euclid. Thus, according to Hobbes’ ‘promissory materialism’, which sees Nature as wholly mechanistic and reduces actions to impulse, all animal behaviours – including human ones – are fully accounted for, and ultimately determined, by what we might now call ‘basic instincts’. But again, is this actually true? What does biology have to say on the matter, and more specifically, what are the findings of those who most closely study real animal behaviour?

*

This chapter is concerned with words rather than birds…

So writes the pioneering British ornithologist David Lack, who devoted much of his life to the study of bird behaviour. For four years, while teaching at Dartington Hall School in Devon, he spent his spare time observing the local robin population, and his findings were delightfully written up in a seminal work titled, straightforwardly, The Life of the Robin. The line above opens chapter fifteen, in which he presents a thoughtful aside under the heading “A digression upon instinct”. It goes on:

A friend asked me how swallows found their way to Africa, to which I answered, ‘Oh, by instinct,’ and he departed satisfied. Yet the most that my statement could mean was that the direction finding of migratory birds is part of the inherited make-up of the species and is not the result of intelligence. It says nothing about the direction-finding process, which remains a mystery. But man, being always uneasy in the presence of the unknown, has to explain it, so when scientists abolish the gods of the earth, of lightning, and of love, they create instead gravity, electricity and instinct. Deification is replaced by reification, which is only a little less dangerous and far less picturesque.

Frustrated by the kinds of misunderstanding generated and perpetuated by misuse of the term ‘instinct’, Lack then explores at length the variety of ambiguities and mistakes that accompany it, in casual conversation and academic contexts alike; considerations that lead him to a striking conclusion:

The term instinct should be abandoned… Bird behaviour can be described and analysed without reference to instinct, and not only is the word unnecessary, but it is dangerous because it is confusing and misleading. Animal psychology is filled with terms which, like instinct, are meaningless, because so many different meanings have been attached to them, or because they refer to unobservables or because, starting as analogies, they have grown into entities. 28

When I first read Lack’s book I quickly fell under the spell of his lucid and nimble prose and marvelled at how infectious his love for his subject was. As ordinary as they may seem to us, robins live surprisingly complicated lives, and all of this was richly told, but what stood out most was Lack’s view on instinct: if its pervasive stink throws us off the scent in our attempts to study bird behaviour, then how much more alert must we be to its bearing on perceived truths about human psychology? Lack ends his own brief digression with a germane quote from philosopher Francis Bacon that neatly considers both:

“It is strange how men, like owls, see sharply in the darkness of their own notions, but in the daylight of experience wink and are blinded.” 29

*

The wolves of childhood were creatures of nightmares. One tale told of a big, bad wolf blowing your house down to eat you! Another reported a wolf sneakily dressing up as an elderly relative and climbing into bed. Just close enough to eat you! Still less fortunate was the poor duck in Prokofiev’s enchanting children’s suite Peter and the Wolf, swallowed alive and heard in a climactic diminuendo quaking from inside his belly. When I’d grown a little older, I also came to hear stories of werewolves that sent still icier dread coursing down my spine…

I could go on and on with similar examples, because wolves are invariably portrayed as rapacious and villainous throughout folkloric traditions across the civilised world of Eurasia, which is actually quite curious when you stop to think about it. Curious because wolves are not especially threatening to humans and wolf attacks are comparatively rare occurrences, while other large animals, including bears, all of the big cats, sharks, crocodiles, and even large herbivores like elephants and hippos, pose a far greater threat to us. To draw an obvious comparison, polar bears habitually stalk humans, and yet rather than seeing them as terrifying we are taught to see them as cuddly. Evidently, our attitudes towards the wolf have been shaped by factors other than the observed behaviour of wolves themselves.

So now let us consider the rather extraordinary relationship our species actually has with another large carnivore: man’s best friend and cousin of the wolf, the dog – and incidentally, dogs kill (and likely have always killed) a lot more people than wolves.

The close association between humans and dogs is incredibly ancient. Dogs are very possibly the first animal humans ever domesticated, becoming so ubiquitous that no society on earth exists that hasn’t adopted them. This adoption took place so long ago in prehistory that conceivably it may have played a direct role in the evolutionary development of our species; and since frankly we will never know the answers here, I feel free to speculate a little. So here is my own brief tale about the wolf…

One night a tribe was sat around the campsite finishing off the last of their meal as a hungry wolf secretly looked on. A lone wolf, and being a lone wolf, she was barely able to survive. Enduring hardship and eking out a precarious existence, this wolf was also longing for company. Drawn to the smell of the food and the warmth of the fire, this wolf tentatively entered the encampment and for once wasn’t beaten back with sticks or chased away. Instead one of the elders at the gathering tossed her a bone to chew on. The next night the wolf returned, and the next, and the next, until soon she was welcomed permanently as one of the tribe: the wolf at the door finding a new home as the wolf by the hearth.

As stories go, it sounds plausible enough that something like it may have happened countless times, and in many places. Having enjoyed the company of the wolf, perhaps the people of the tribe later adopted her cubs (or perhaps it all began with cubs). In any case, as the wolves became domesticated they changed, and within just a few generations of selective breeding they had been fully transformed into dogs.

The rest of the story is more or less obvious too. With dogs, our ancestors enjoyed better protection and could hunt more efficiently. Dogs run faster and have far greater endurance, as well as keener hearing and smell. Soon they became our fetchers and carriers too; our dogsbodies. Speculating a little further, our symbiotic relationship might also have opened up the possibility for evolutionary development at a physiological level. Like cave creatures that lose pigmentation, and in which eyesight atrophies in favour of a greater tactile sense or sonar 30, we too might have lost acuity in those senses we needed less, as the dogs compensated for our loss, freeing up our brains for other tasks. Did losses in our faculties of smell and hearing enable more advanced dexterity and language skills? Did we perhaps also lose our own snarls to replace them with smiles?

I shan’t say much more about wolves, except that we know from our close bond with dogs that they are affectionate and loyal creatures. So why did we vilify them as the “big, bad wolf”? My hunch is that they symbolically represent something we have lost, or perhaps more pertinently, something we have repressed in the process of our own domestication. In a deeper sense, this psychological severance involved our alienation from all of nature. It has caused us to believe, like Hobbes, that all of nature is nothing but rapacious appetite, red in tooth and claw, and that morality must therefore be imposed upon it by something other; that other being human rationality.

Our scientific understanding of wolf behaviour has been radically overturned. Previously accepted beliefs that wolves compete for dominance by becoming alpha males or females turn out to be largely untrue, or at least true only when unrelated wolves are kept together in captivity. In all cases where wolves are studied in their natural environment, the so-called ‘alpha’ wolves are just the parents; in other words, wolves form families just like we do:

*

One school views morality as a cultural innovation achieved by our species alone. This school does not see moral tendencies as part and parcel of human nature. Our ancestors, it claims, became moral by choice. The second school, in contrast, views morality as growing out of the social instincts that we share with many other animals. In this view, morality is neither unique to us nor a conscious decision taken at a specific point in time: it is the product of gradual social evolution. The first standpoint assumes that deep down we are not truly moral. It views morality as a cultural overlay, a thin veneer hiding an otherwise selfish and brutish nature. Perfectibility is what we should strive for. Until recently, this was the dominant view within evolutionary biology as well as among science writers popularizing this field. 31

These are the words of Dutch primatologist Frans de Waal, who became one of the world’s leading experts on chimpanzee behaviour. Based on his studies, de Waal applied the term “Machiavellian intelligence” to describe the variety of cunning and deceptive social strategies used by chimps. A few years later, however, de Waal encountered the bonobos, pygmy cousins of both the chimps and ourselves, which were also being kept in a zoo in Holland, and he says they had an immediate effect on him:

“[T]hey’re totally different. The sense you get looking them in the eyes is that they’re more sensitive, more sensual, not necessarily more intelligent, but there’s a high emotional awareness, so to speak, of each other and also of people who look at them.” 32

Sharing a common ancestor with bonobos and chimps, humans are in fact equally closely related to both species, and interestingly, when de Waal was asked whether we are more like the bonobo or the chimp, he replied:

“I would say there are people in this world who like hierarchies, they like to keep people in their place, they like law enforcement, and they probably have a lot in common, let’s say, with the chimpanzee. And then you have other people in this world who root for the underdog, they give to the poor, they feel the need to be good, and they maybe have more of this kinder bonobo side to them. Our societies are constructed around the interface between those two, so we need both actually.” 33

De Waal and others who have studied primates are often astonished by their kinship with our own species. When we look deep into the eyes of chimps, gorillas, or even those of our dogs, we find ourselves reflected in so many ways. It’s not hard to fathom where morality came from, and the ‘veneer theory’ of Hobbes reeks of a certain kind of religiosity, infused with a deep insecurity born of the hardship and terrors of civil strife.

*

New scientific studies are showing that primates, elephants and other mammals, including dogs, also display empathy, cooperation, fairness and reciprocity, suggesting that morality is an aspect of nature. Here Frans de Waal shares some surprising videos of behavioural tests that show how many of these moral traits all of us share:

*

II Between two worlds

I was of three minds,

Like a tree

In which there are three blackbirds

— Wallace Stevens 35

*

Of all the creatures on earth, apart from a few curiosities like the kangaroo and the giant pangolin, or some species of long-extinct dinosaurs, only the birds share our bipedality. The adaptive advantage of flight is so self-evident that there’s no need to ponder why the forelimbs of birds morphed into wings, but the human case is more curious. Why, about four million years ago, a branch of hominids chose to stand on two legs rather than four, enabling them to move quite differently from our closest living relatives (bonobos and chimps), with all of the physiological modifications this involved, still remains a mystery. But what is abundantly clear and beyond all speculation is that this single evolutionary change freed up our hands for purposes no longer restricted by their formative locomotive demands, and that, having liberated our hands, not only did we become supreme manipulators of tools, but this sparked a parallel growth in intelligence, causing us to become supreme manipulators per se – the word itself deriving, of course, from the Latin ‘manus’, meaning ‘hand’.

With our evolution as manual apes, humans also became constructors, and curiously here is another trait that we have in common with many species of birds. That birds are able to build elaborate structures to live in is indeed a remarkable fact, and that they necessarily achieve this by organising and arranging the materials using only their beaks is more remarkable still. Storks with their ungainly bills somehow manage to arrange large piles of twigs so carefully that their nests often overhang impossibly small platforms like the tips of telegraph poles. House martins construct wonderfully symmetrical domes just by patiently gluing together globules of mud. Weaver birds, a range of species similar to finches, build the most elaborate nests of all, and quite literally weave their homes from blades of grass. How they acquired this ability remains another mystery, for though recent studies have found that a degree of learning is involved in the style and manner of construction, the general ability of birds to build nests is innate. According to that throwaway term, they do it ‘by instinct’. By contrast, in one way or another, all human builders must be trained. As with so much about us, all our constructions are therefore cultural artefacts.

*

Many owls have yellow eyes. Cormorants instead have green eyes. Moorhens and coots have red eyes. The otherwise unspectacular satin bowerbird has violet eyes. Jackdaws sometimes have blue eyes. Blackbirds have extremely dark eyes – darker even than their feathers – jet black pearls set within a slim orange annulus which neatly matches their strikingly orange beaks. While eye colour is largely uniform within each species of bird, the case is clearly different amongst humans, where eye colour is one of a multitude of variable physical characteristics, including natural hair and skin colour, facial features, and height. Nonetheless, as with birds and other animals where there is significant uniformity, most of these colourings and other identifying features are physical expressions of the individual’s genetic make-up, or genotype; an outward expression of genetic inheritance known technically as the phenotype.

Interestingly, for a wide diversity of species, there is an inheritance not only of morphology and physiology but also of behaviour. Some of these behavioural traits may then act in turn to shape the creature’s immediate environment, so that the full phenotypic expression is often observed to operate outside and far beyond the body of the creature. These ‘extended phenotypes’, as Dawkins calls them, are found in such wondrous but everyday structures as spiders’ webs, the delicate tube-like homes formed by caddis fly larvae, the larger-scale constructions of beavers’ dams, and of course birds’ nests. It is reasonable, therefore, to speculate on whether the same evolutionary principle applies to our human world.

What, for instance, of our own houses, cars, roads, bridges, dams, fortresses, cathedrals, systems of knowledge, economies, music and other works of art, languages…? Once we have correctly located our species as just one amongst many, existing at a different tip of an otherwise unremarkable branch of our undifferentiated evolutionary tree of life, why wouldn’t we judge our own designs as similarly latent expressions of human genes interacting with their environment? Indeed, Dawkins addresses this point directly, arguing that, tempting as it may be, such a broadening of the concept of phenotype stretches his ideas too far, since, to offer his own example, we would then have to seek genetic differences between the architects of different styles of building! 36

In fact, the distinction here is clear: artefacts of human conception, which can be as wildly diverse as Japanese Noh theatre, Neil Armstrong's footprints on the moon, Dadaist poetry, recipes for Christmas pudding and TV footage of Geoff Hurst scoring a World Cup hat-trick, as mundane as flush toilets, or as rarefied as Einstein's thought experiments, are all categorically different from such animal artefacts as spiders' webs and beavers' dams. They are patterns of culture, not nature. Likewise, all human behaviour, right down to the most ephemeral gestures, articulations and tics, is profoundly patterned by culture and not shaped solely by pre-existing and underlying patterns within our human genotypes.

Vocabulary – another human artefact – makes this plain. We all know that eggs are 'natural' whereas Easter eggs are distinguishable as 'artificial', and that the eye is 'natural' while cameras are 'technological', with both of these antonyms deriving from roots that mean 'art'. And while 'nature' is a strangely slippery noun that in English points to a whole host of interrelated objects and ideas, equivalent words are nonetheless found throughout other languages to distinguish our manufactured worlds – of arts and artifice – from the surrounding physical world made up solely of animals, plants and landscapes. This same word-concept is reinvented again and again for a simple yet important reason: the difference it labels is inescapable.

*

As a species, we are incorrigibly anthropomorphising; constantly imbuing the world with our own attributes and mores. Which brings up a related point: what animal besides the human is capable of reimagining things in order to make them conform to any preconceived notion of any kind? Dogs may mistake us for other dogs – although I doubt this – but still we are their partners within surrogate packs, and thus, in a sense, surrogate dogs. But from what I know of dogs, their world is altogether more direct. Put simply it is… stick chasing… crap taking… sleep sleeping… or (best of all) going for a walk, which again is more straightforwardly being present on an outdoor exploration! In short, dogs live so close to the passing moment because they have nowhere else to live. Yet humans mostly cannot. Instead we drift in and out of our past or into anticipation of our future. Recollections and goals fill our thoughts repeatedly and it is exceedingly difficult to attend fully to the present.

Moreover, for us the world is nothing much without other humans. Without culture, any world worthy of the name is barely conceivable at all, since humans are primarily creatures of culture. Yes, there would still be the wondrous works of nature, but no art beyond, and no music except for the occasional bird-song and the wind in the trees: nothing but nothing beyond the things-in-themselves that surround us, and without other humans, no need to communicate our feelings about any of this. In fact, there could be no means to communicate at all, since no language could ever form in such isolation. Instead, we would float through a wordless existence, which might be blissful or grindingly dull, but either way our sense impressions and emotions would remain unnamed.

So it is extremely hard to imagine any kind of world without words, although such a world quite certainly exists. It exists for animals and it exists in exceptional circumstances for humans too. The abandoned children who have been nurtured by wild animals (very often wolves) provide an uneasy insight into this world beyond words. So too, for different reasons, do a few of the profoundly and congenitally deaf. On very rare occasions, these children have gone on to learn how to communicate, and when this happens, what they tell us is just how important language is.

*

In his book Seeing Voices, neurologist Oliver Sacks describes the awakening of a number of remarkable individuals. One such was Jean Massieu. Almost without language until the age of fourteen, Massieu became a pupil at Roch-Ambroise Cucurron Sicard's pioneering school for the deaf. Astonishingly, he went on to become eloquent in both sign language and written French.

Drawing on Sicard's original account, Sacks examines Massieu's steep learning curve, and sees close similarities to his own experience with a deaf child. By attaching names to objects in the pictures Massieu would draw, Sicard was able to open the young man's eyes. Labels that, to begin with, left his pupil "utterly mystified" were abruptly understood once Massieu had "got it". And here Sacks emphasises how Massieu understood not just an abstract connection between the pencil lines of his own drawing and the seemingly incongruous additional strokes of his tutor's labels, but, almost instantaneously, also recognised the value of such a tool: "… from that moment on, the drawing was banished, we replaced it with writing."

The most magical part of Sacks’ retelling comes in the description of Massieu and Sicard’s walks together through the woods. “He didn’t have enough tablets and pencils for all the names with which I filled his dictionary, and his soul seemed to expand and grow with these innumerable denominations…” Sicard later wrote.

Massieu's epiphany brings to mind the story of Adam, who was set the task of naming all the animals in Eden, and Sacks tells us:

“With the acquisition of names, of words for everything, Sicard felt, there was a radical change in Massieu’s relation to the world – he had become like Adam: ‘This newcomer to earth was a stranger on his own estates, which were restored to him as he learned their names.’” 37

This gift for language is, quite obviously, what most sets us apart from other creatures. Not that humans invented language from scratch, of course, since it grew up both with us and within us: one part phenotype and one part culture. It evolved within other species too, but for reasons unclear, we excelled, and as a consequence became adapted to live in two worlds, or as Aldous Huxley preferred to put it: we have become "amphibian", in that we simultaneously occupy "the given and the home-made, the world of matter, life and consciousness and the world of symbols." 38

Words and symbols enable us to relate the present to the past. We reconstruct it or perhaps reinvent it. Likewise, with language we can envisage a future. This moves us outside Time. So it helps us to heal past wounds and to prepare for future events. Indeed, it anchors the world and our place within it, but, correspondingly, it also detaches us from the immediate present.

For whereas many living organisms exist entirely within their immediate physical reality, human beings occupy a parallel ideational space where we are almost wholly embedded in language. Now think about that for a moment… no, really do!

Stop reading this.

Completely ignore this page of letters, and silence your mind.

Okay, close your eyes and turn your attention to absolutely anything you like and then continue reading…

So here’s my question: when you were engaged in your thoughts, whatever you thought about, did you use words at all? Very likely you literally “heard” them: your inner voice filling the silence in its busy, if generally unobtrusive and familiar way. Pause again and now contemplate the everyday noise of being oneself.

Notice how exceedingly difficult it is to exist, if only for a moment, without any recourse to language.

Perhaps what Descartes really meant to say was: I am therefore I think!

For as the ‘monkey mind’ goes wandering off, instantly the words have crept back into our mind, and with our words comes this detachment from the present. Every spiritual teacher knows this, of course, recognising that we cannot be wholly present to the here and now while our mind darts off to visit memories, wishes, opinions, descriptions, concepts and plans: the same memories, wishes, opinions, descriptions, concepts and plans that gave us an evolutionary advantage over our fellow creatures. The sage also understands how the true art of meditation cannot involve any direct effort to silence our excitable thoughts, but only to ignore them. Negation of thought is not thinking no thought; it is not thinking at all: no words!

It is evident therefore how in this essential way we are indeed oddly akin to amphibious beings since we occupy and move between two distinct habitats. Put differently, our sensuous, tangible outside world of thinginess (philosophers sometimes call this ‘sense data’) is totally immersed within the inner realms of language and symbolism. So when we see a blob with eight thin appendages we very likely observe something spider-like. If we hate spiders then we are very likely to recoil from it. If we have a stronger aversion then we will recoil even after we are completely sure that it’s just a picture of a spider or, in extreme cases, a tomato stalk. On such occasions, our feelings of fear or disgust arise not as the result of failing to distinguish the likeness of a spider from a real spider, but from the power of our own imagination: we literally jump at the thought of a spider.

Moreover, words are sticky. They coagulate together in streams of association and these mould our future ideas. Religion = goodness. Religion = stupidity. If we hold the first opinion then crosses and pictures of saints will automatically generate a different affect than if we hold the latter. Or how about replacing the word ‘religion’ with say ‘patriotism’: obviously our perception of the world alters in a different way. In fact, just as the pheromones in the animal kingdom cause the direct transmission of behavioural effects between members of a species, the language secreted by humans is likewise capable of directly impacting the behaviour of others.

It has become our modern tendency to suppose automatically that the arrow which connects these strikingly different domains points unerringly in one direction: that language primarily describes the world, whereas the world as such is relatively unmoved by our descriptions of it. This is basically the presumed scientific arrangement. By contrast, any kind of magical reinterpretation of reality involves a deliberate reversal of the direction of the arrow, such that all symbols and language are treated as potent agents that might actively cause change within the material realm. Scientific opinion holds that this is false, and yet, on a deeply personal level, language and symbolism not only constitute the living world, but do quite literally shape and transform it. As Aldous Huxley writes:

"Without language we should merely be hairless chimpanzees. Indeed, we should be something much worse. Possessed of a high IQ but no language, we should be like the Yahoos of Gulliver's Travels—creatures too clever to be guided by instinct, too self-centred to live in a state of animal grace, and therefore condemned to remain forever, frustrated and malignant, between contented apehood and aspiring humanity. It was language that made possible the accumulation of knowledge and the broadcasting of information. It was language that permitted the expression of religious insight, the formulation of ethical ideals, the codification of laws. It was language, in a word, that turned us into human beings and gave birth to civilization." 39

*

As I look outside my window I see a blackbird sitting on the TV aerial of a neighbouring rooftop. This is what I see, but what does the blackbird see? Obviously I cannot know for certain, though merely in terms of what he senses, we know that his world is remarkably different from ours. For one thing, birds have four types of cone cells in the retinas of their eyes while we have only three. Our cone cells collect photons centred on red, green and blue frequencies, and their different combinations generate a range of colours that can be graphically mapped as a continuously varying two-dimensional plane; if we add another colour receptor, however, the same mapping requires an additional axis that extends above the plane. For this reason we might justifiably say that the bird's colour vision differs from ours not merely in the extent of its detectable range of frequencies, but in involving a range of colour combinations of a literally higher dimension.

Beyond these immediate differences in sense data, there is another way in which a bird’s perceptions – or more strictly speaking its apperceptions – are utterly different from our own, for though the blackbird evidently sees the aerial, it does not recognise it as such. Presumably it sees nothing beyond a convenient metal branch to perch upon decked with unusually regular twigs. For even the most intelligent of all blackbirds is incapable of knowing more, since this is all any bird can ever understand about the aerial.

No species besides our own is capable of discovering why the aerial was actually put there, or how it is connected to an elaborate apparatus that turns the invisible signals it captures into pictures and patterns of sounds, let alone of gathering the knowledge of how metal is extracted by smelting ore, or of the still more abstruse science of electromagnetism.

My point here is not to disparage the blackbird’s inferior intellect, since it very possibly understands things that we cannot; but to stress how we are unknowingly constrained in ways we very likely share with the bird. As Hamlet cheeks his friend: “There are more things in heaven and earth, Horatio, than are dreamt of in your philosophy.”

Some of these things – and especially the non-things! – may slip by us forever as unknown unknowns purely by virtue of their inherently undetectable nature. Others may be right under our nose and yet, just like the oblivious bird perched on its metal branch who can never consider why it is there, we too may lack any capacity even to understand that there is any puzzle at all.

*

I opened the chapter with a familiar Darwinian account of human beings as apex predators struggling for survival on an ecological battlefield; perpetually fighting over scraps, and otherwise competing over a meagre share of strictly limited resources. It is a vision of reality founded upon our collective belief in scientific materialism, and although a rather depressing vision, it has become today’s prevailing orthodoxy – the Weltanschauung of our times – albeit seldom expressed so antiseptically as it might be.